B2C (Dating)

SafeScreen: Designing an AI Moderation System

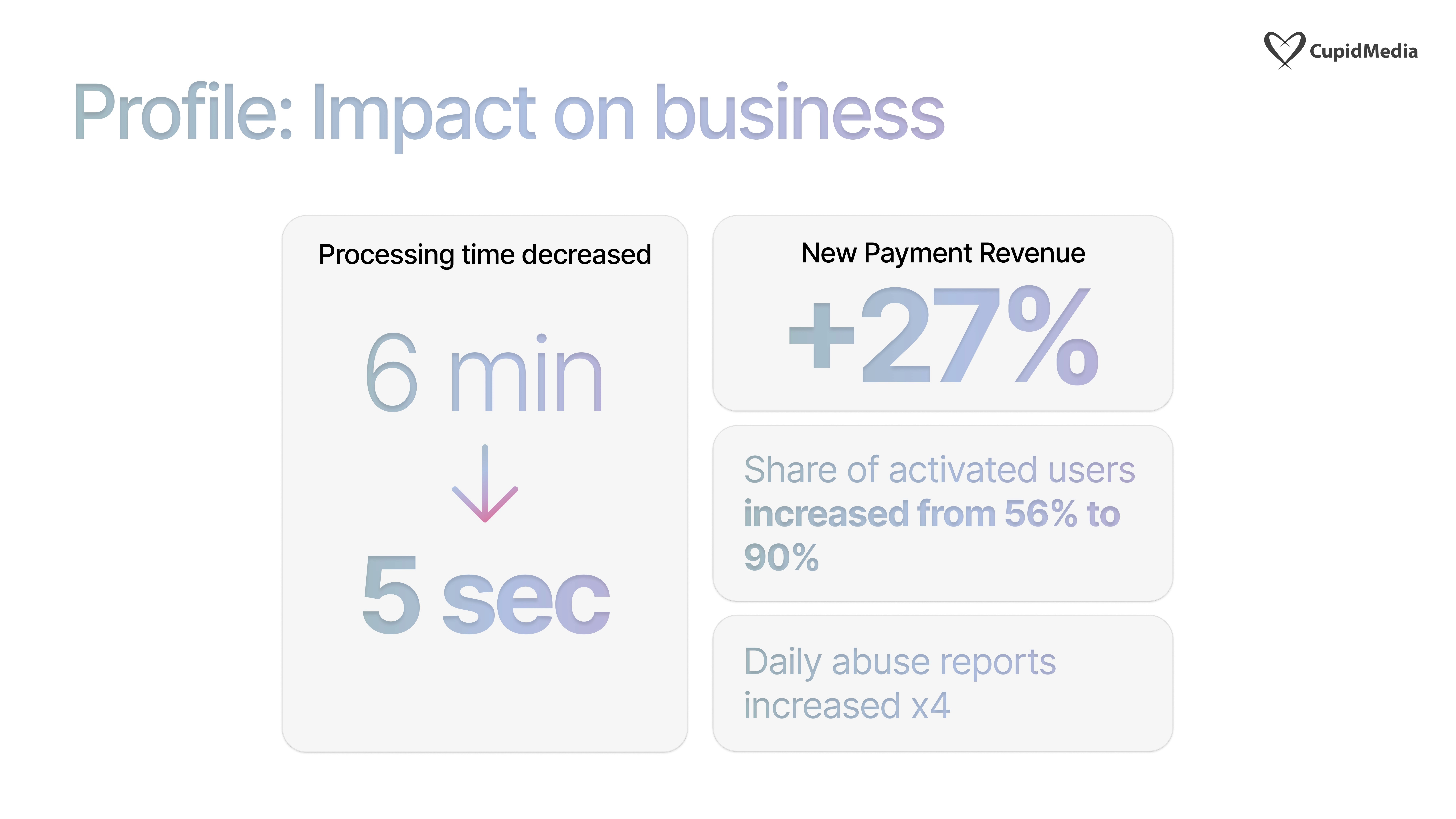

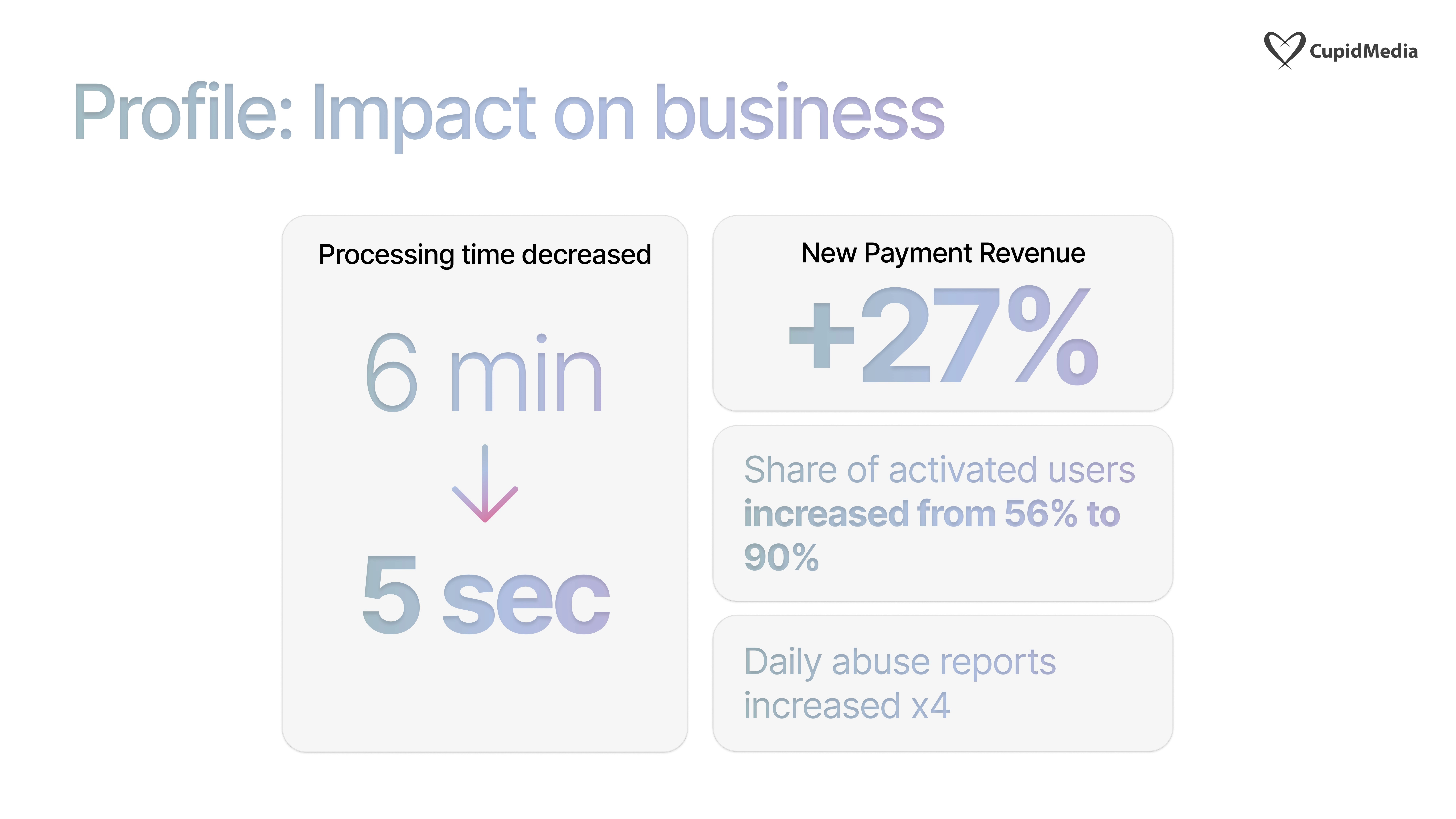

+27%

New Payment Revenue

$260,000

Total Annual Cost Savings

+34%

User Activation Rate

Overview

This project, "SafeScreen," was a strategic initiative to replace Cupid Media's costly and inefficient manual moderation process with a streamlined, LLM-based AI system. The goal was to reduce costs, speed up content approval, and improve the new-user experience, with a direct impact on activation and revenue.

My primary design contribution was not a visual UI, but rather the Information Architecture and Systems Logic for the new AI model. I was responsible for translating hundreds of complex legacy rules into a simple, streamlined flow that the ML team could build.

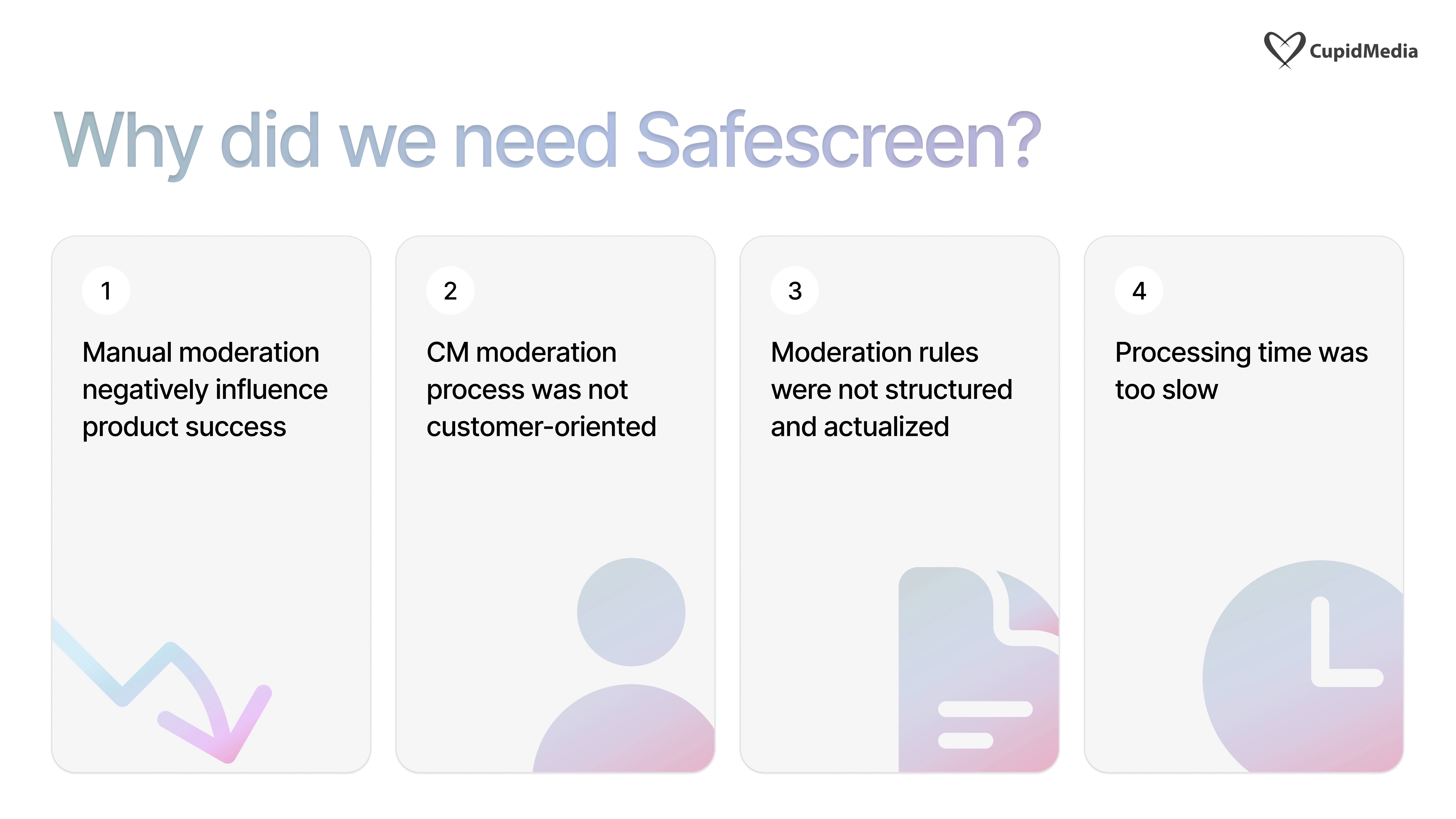

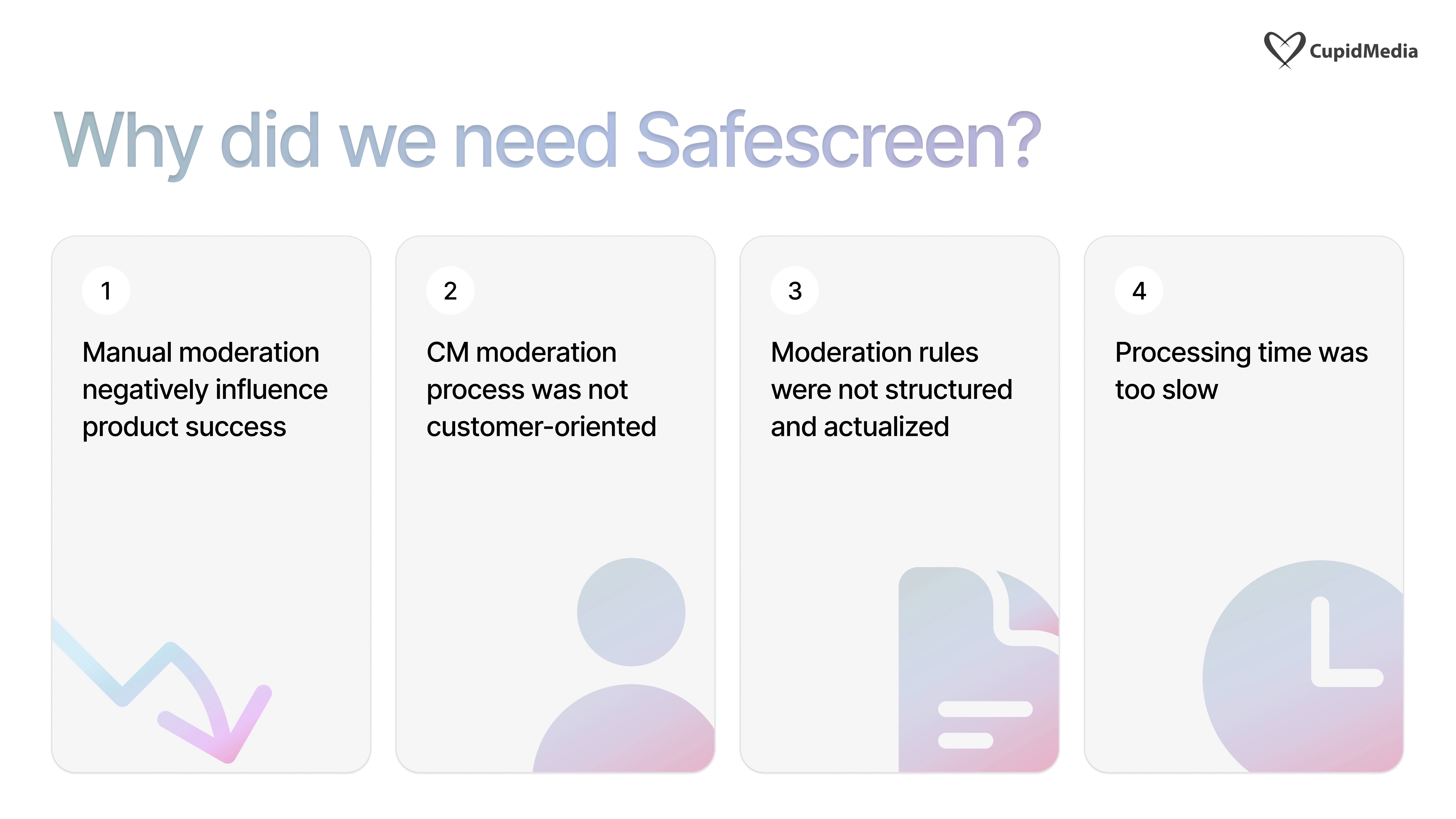

The Challenge: A Slow, Costly, & Unstructured System

The existing manual moderation system was a major business bottleneck.

The Solution: A Phased AI Moderation Strategy

We tackled this by breaking the project into three phases:

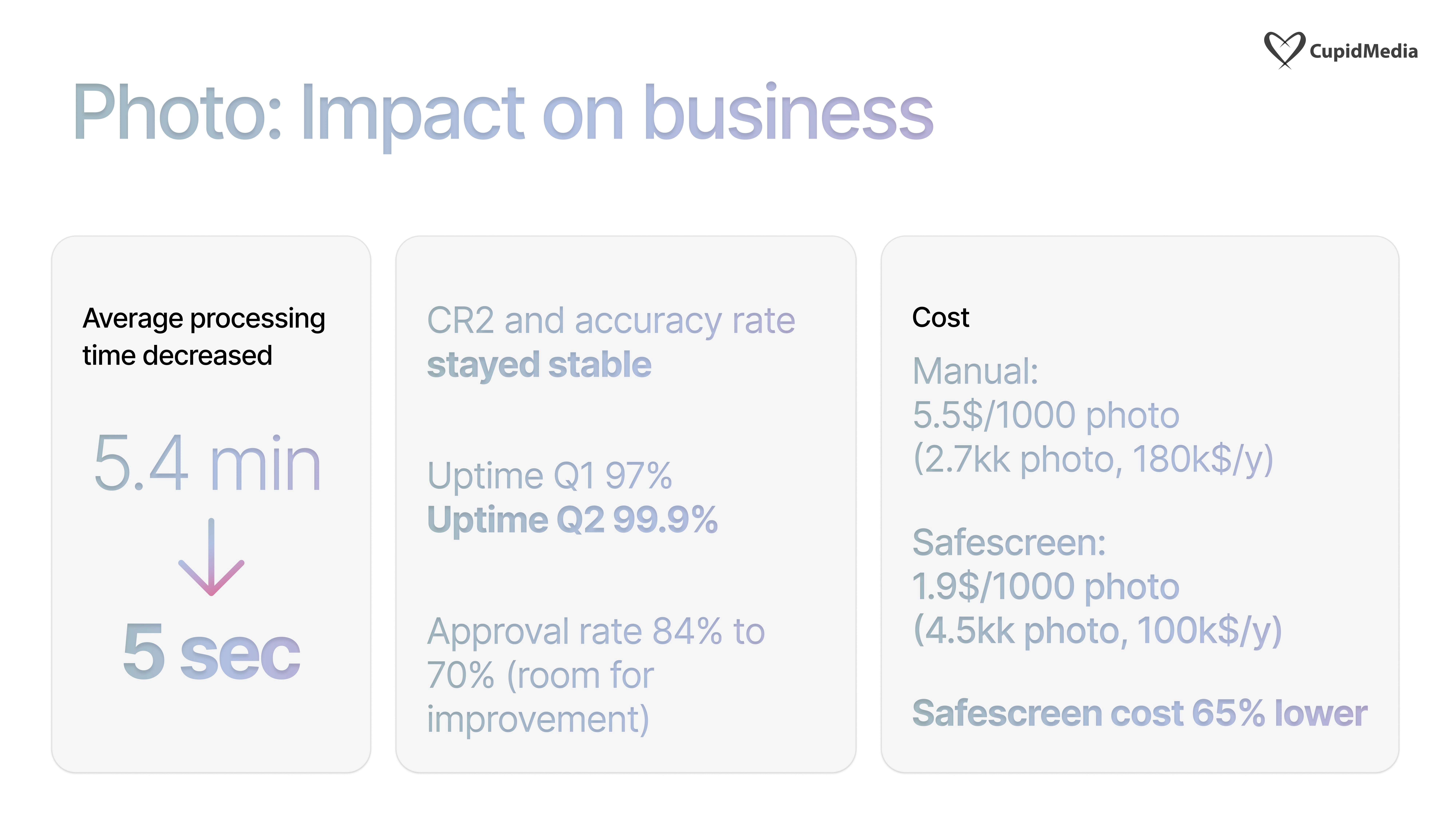

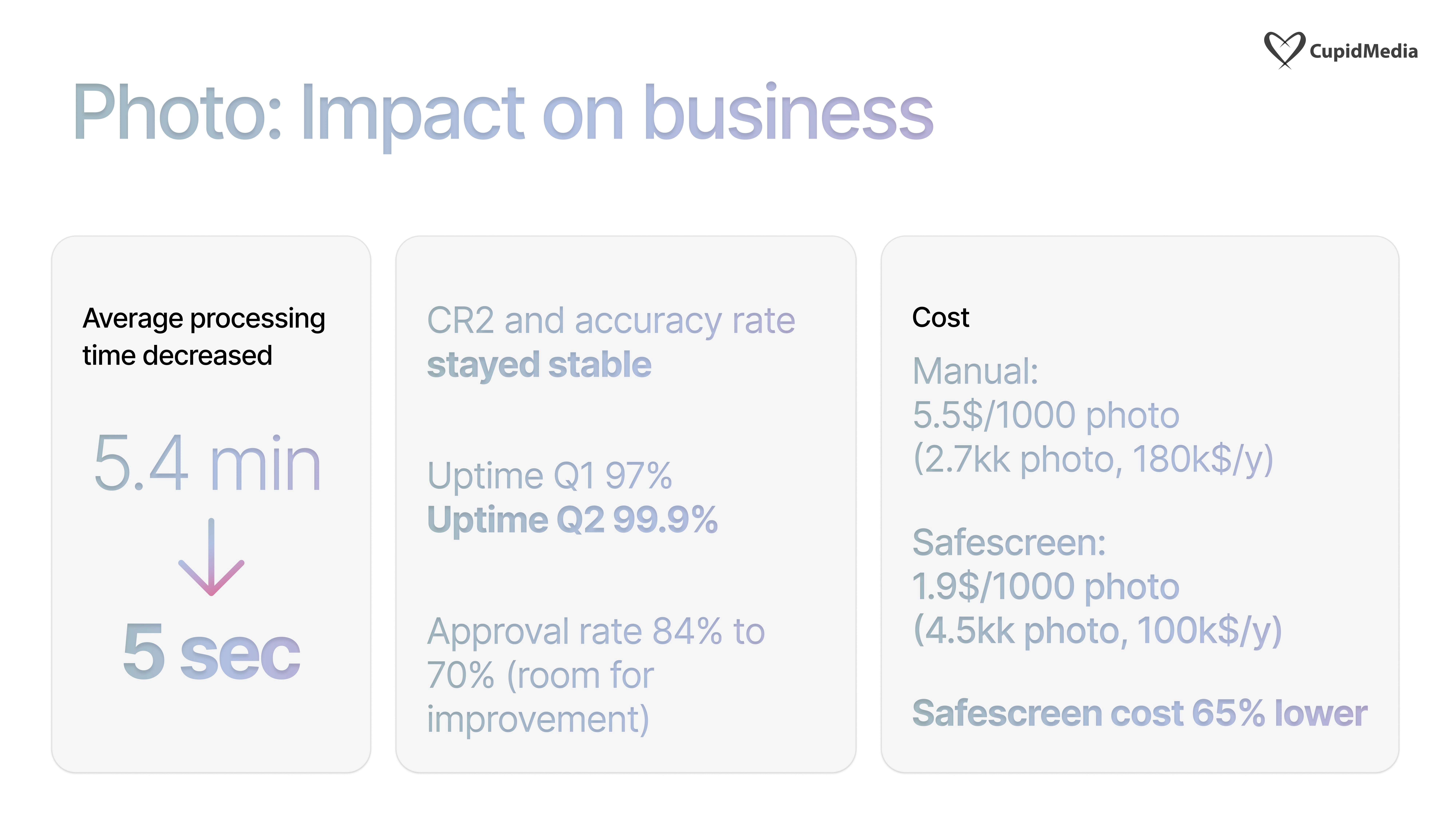

1. Photo Moderation (Shipping Speed & Reducing Cost)

The first step was to automate photo approvals to deliver our service to new users faster.

We deployed an AI model to handle 100% of photo moderation.

2. Profile Moderation (Driving Revenue & Activation)

Next, we automated the text-based profile moderation (bios, prompts, etc.) to get users fully activated.

This was a complex task. I worked with the ML team to distill hundreds of legacy rules into just 8 streamlined, LLM-based logic rules, which allowed us to turn off expensive legacy tools.

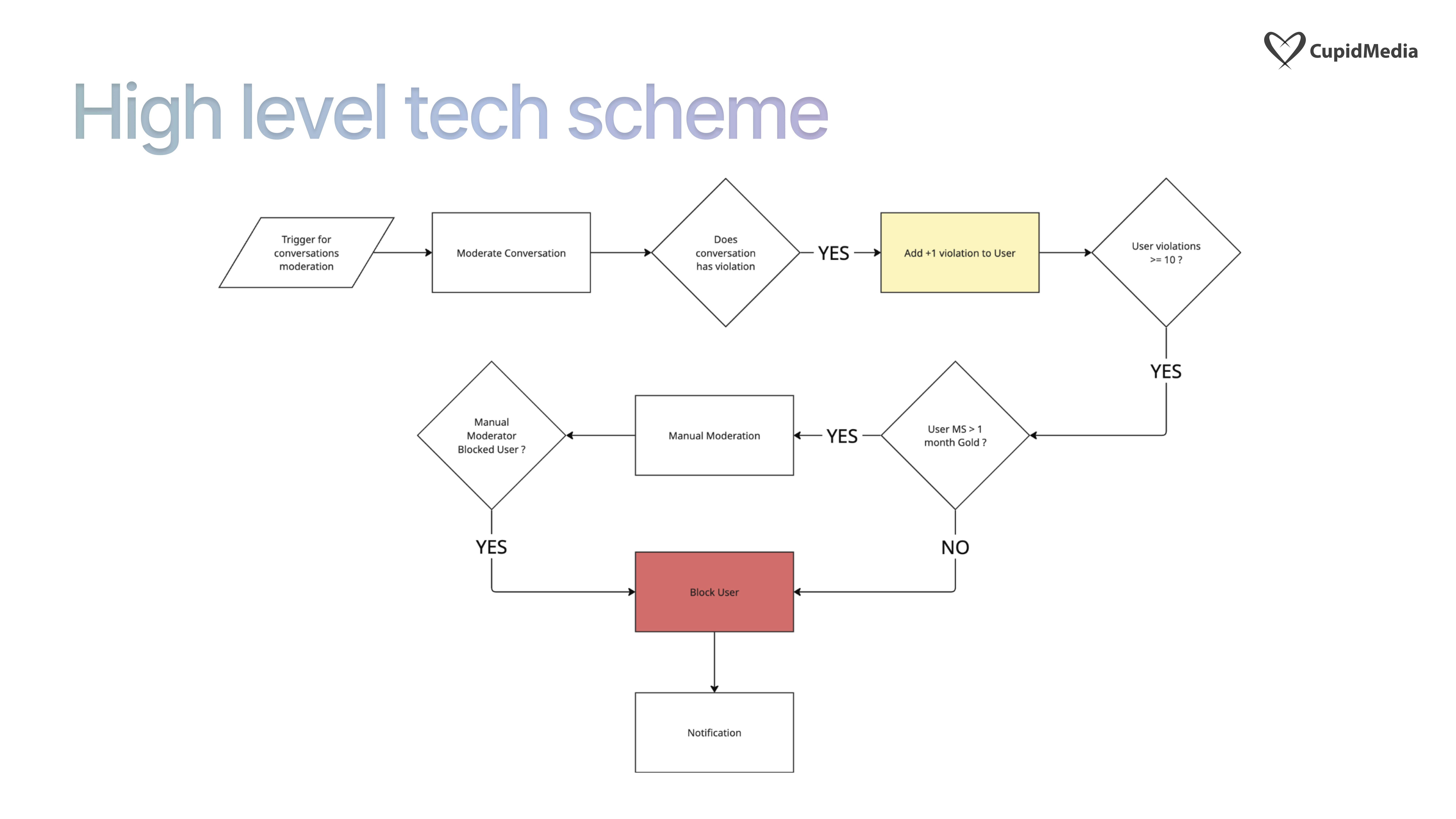

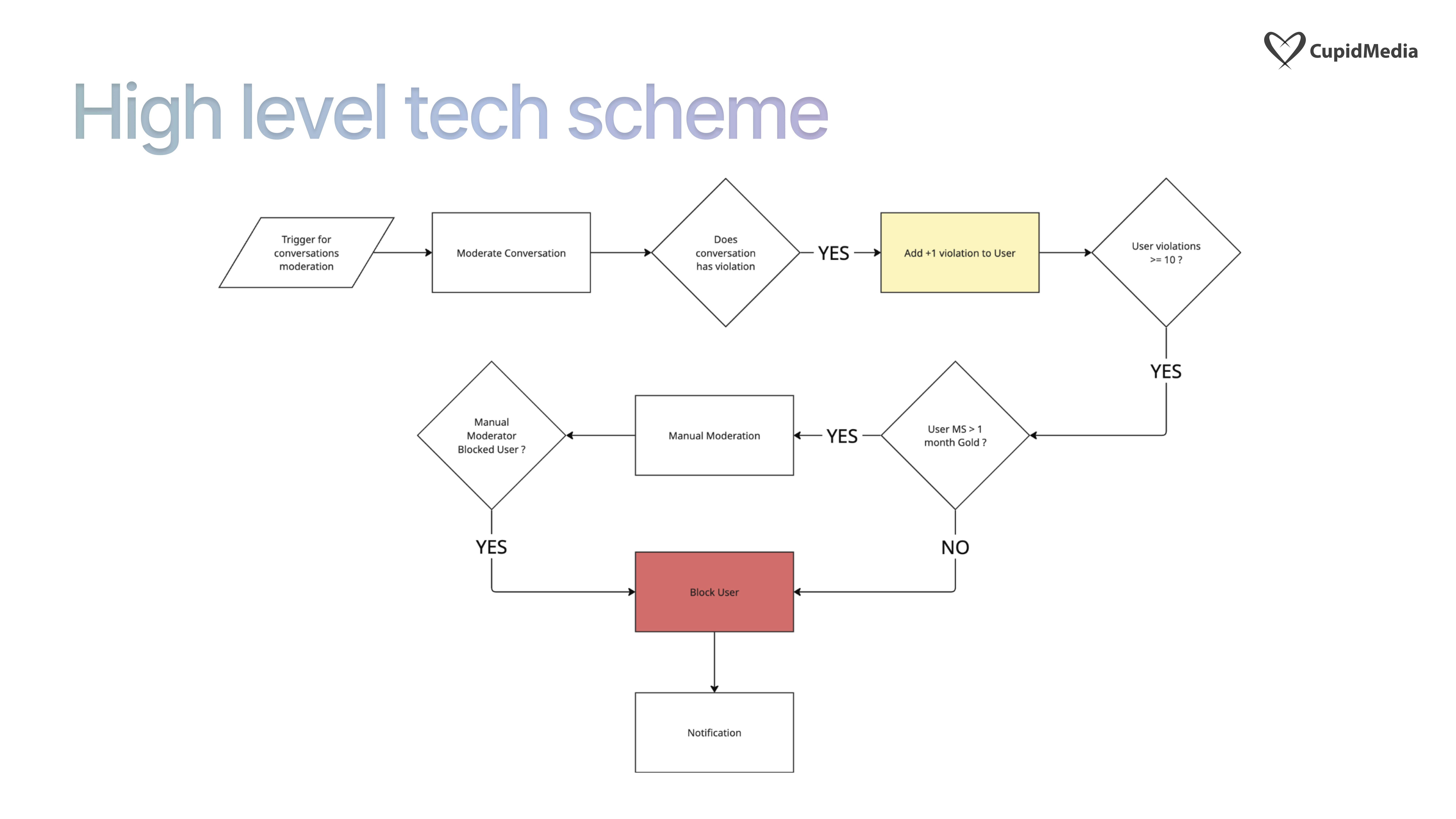

3. Conversation Moderation (Future Vision)

The final phase (in progress as I transitioned) was to apply this AI logic to user-to-user chat to proactively catch fraud and abuse, improving retention and user safety.

Challenges & Key Learnings

This project was not without its challenges, which provided valuable lessons:

Metrics Tell the Whole Story. After launch, we saw a 4x increase in daily abuse reports. Although the number of activated users on our platform grew, too many fraudsters were now joining unnoticed.

AI is an Iterative Process. Our new photo model had a lower initial approval rate (70% vs 84%). This wasn't a failure, but a baseline. It highlighted the need for continuous training and a clear "human-in-the-loop" process for edge cases.
